DSLR Camera Software

Background

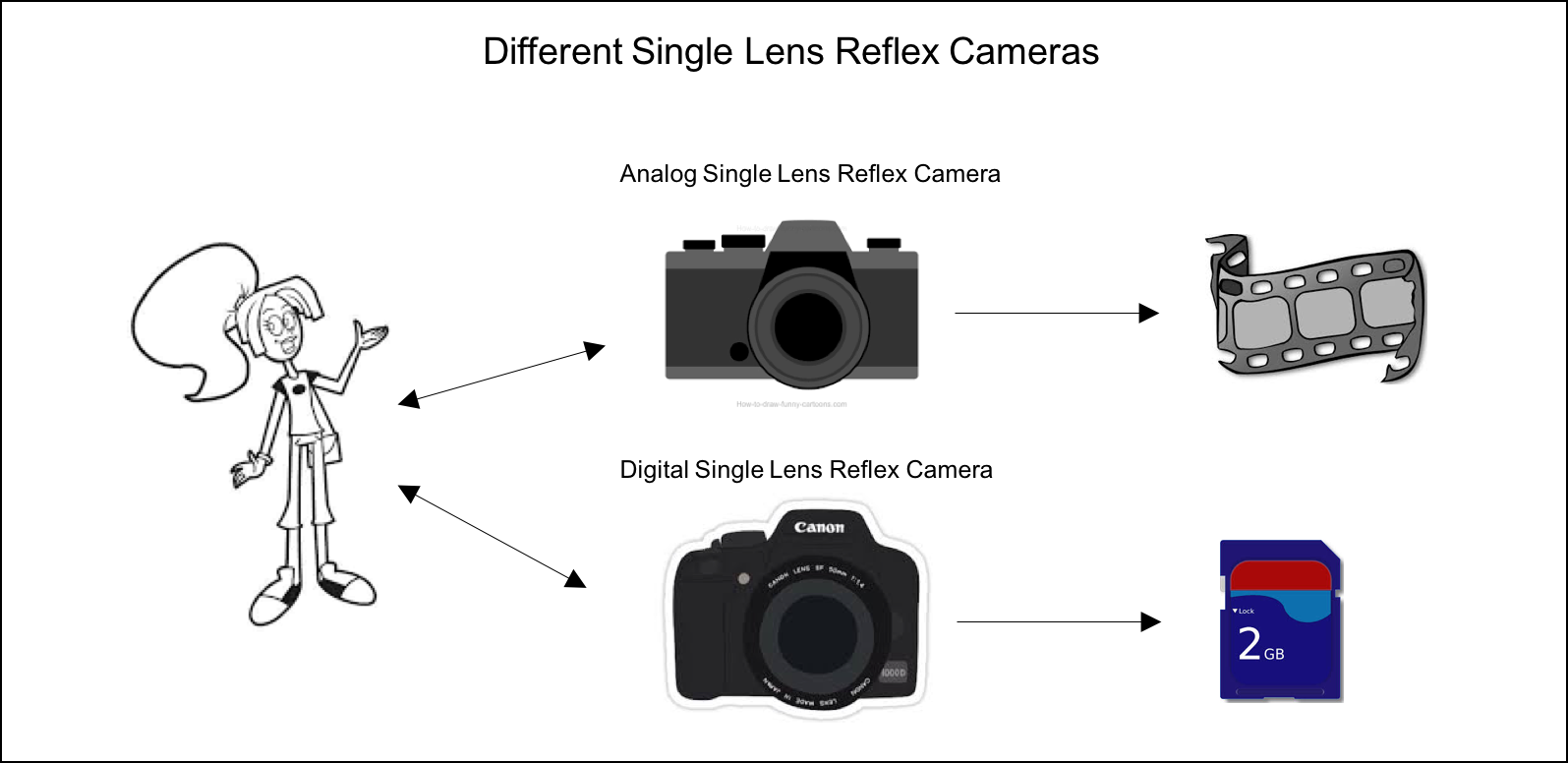

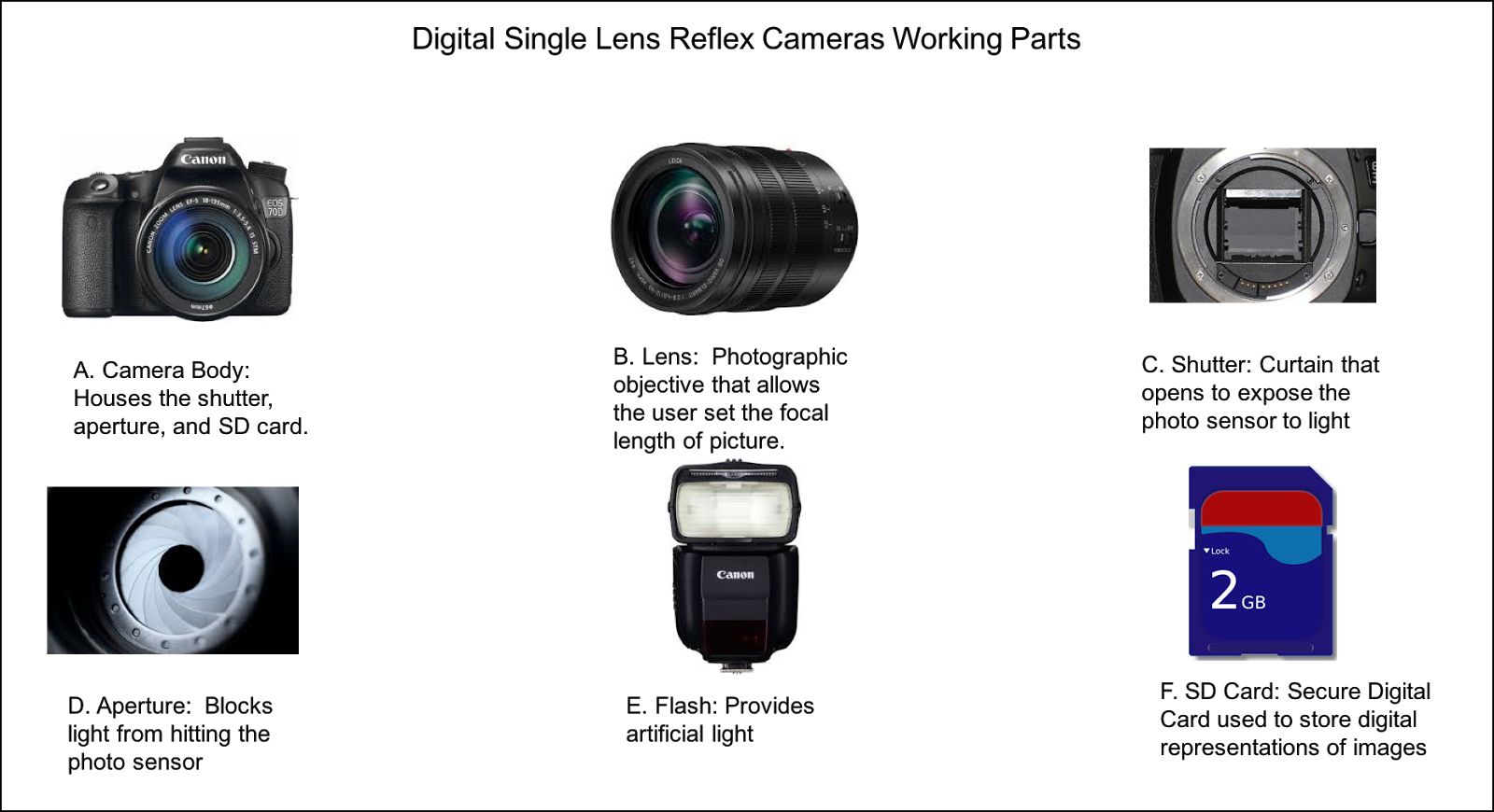

A DSLR - or Digital Single Lens Reflex - camera is a digital version of the widely popular SLR or Single Lens Reflex camera. The most significant difference between the two devices is that the SLR camera stores the images it captures on cellulose-based film, while the DSLR camera stores its images on persistent digital storage such as secure digital (SD) cards (See Figure 1). The two devices are targeted at slightly different end users. The film-based models of this camera have traditionally been used by those with experience in manually operating a camera. Manual use of a camera requires the user to choose the lens and to explicitly set the aperture, flash, light sensitivity (ISO) of the film (or sensor, in the case of DSLRs), and shutter speed in order to capture a picture (See Figure 2). The DSLR camera attempts to widen the user base to include not only the professional photographer who is well versed in the operation of the device, but also amateur photographers and individuals with little to no experience operating a camera.

(Figure 1) Analog vs. Digital Photo Capture

(Figure 2) Anatomy of a Camera

Use Cases

The primary intended user of the DSLR camera system that is being designed for this project is the beginning to novice photographer who has little to no experience manually operating a DSLR camera. The secondary intended user is the photographer with intermediate experience operating a DSLR camera. Below, we examine the most common usage scenario for each target user.

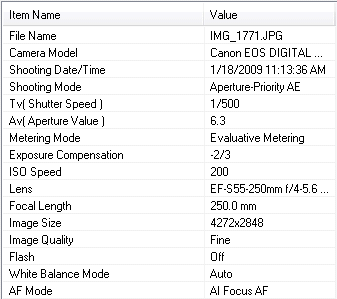

Jim is a thirty-four-year-old father of an eleven-year-old boy and a nine-year-old girl. Jim’s son is an avid soccer player who is in his fifth year of playing with the local recreation league team. Jim’s daughter not only takes piano lessons, where she performs in two recitals a year, but is also in her second year of ballet lessons, where she participates in the annual holiday show. Jim has, up until this point, used the camera on his smartphone to record his children’s events. While this works well for the family to build their personal photo albums, Jim would like to take higher quality pictures to put in a family photo album, and also so he can brag about his children to his friends and co-workers. Jim would like to be able to capture high quality images like those commonly produced by a DSLR camera, but he has no experience setting up the camera to take these kinds of pictures. Jim wants a DSLR camera that he can turn on, point at the subject of the picture, and take the picture. Jim would like to be able to capture the time and location of the pictures so he can keep them organized in photo albums (Figure 3). Finally, Jim would like to be able to easily move the pictures from the camera to his computer.

(Figure 3) An example of image metadata, commonly known as EXIF (Exchangeable Image File) data

Ashley is a forty-year-old working professional who enjoys landscape photography and, for a small fee, takes sports team and individual player pictures for the local recreation sports leagues. Ashley knows her way around the manual operation of a DSLR camera. She has taken a couple of photography classes to learn how to operate a DSLR camera in different outdoor settings and lighting conditions. Ashley needs a camera that she can take full control of, manually setting the aperture, shutter speed, light sensitivity (ISO), focus, and whether or not the flash will engage when capturing a picture. Like Jim, Ashley would like to be able to capture the location, date, and time a picture was taken. Unlike Jim, however, Ashley would like the ability to make edits to the pictures while they are still on the camera. These edits would be relatively minor, such as noise-removal or a black-and-white filter, in order to have a rough representation of what she plans for the photos when previewing them with her clients.

Requirements

Functional Requirements

FR-1. The camera must be turned on and set to automatic mode in order for the system to control the camera.

FR-2. The camera must be turned on and set to manual mode in order for the system to relinquish control of the camera.

FR-3. The system must support the ability to autofocus the camera. The system must physically manipulate the lens to set the focal point based on the target object of the picture.

FR-4. The system must support the ability to measure the amount of light on the sensor and physically manipulate the aperture based on the amount of light in the environment.

FR-5. The system must support the ability to measure the amount of light on the sensor and physically engage the flash based on the amount of light in the environment.

FR-6. The system must support the ability to measure the amount of light on the sensor and physically manipulate the speed of opening and closing of the shutter based on the amount of light in the environment.

FR-7. The system must persist captured image data to physical storage in the form of a secure digital (SD) card.

FR-8. The system must persist image metadata consisting of camera settings, location coordinates read from an onboard GPS device, as well as the date and time the image was captured, to physical storage in the form of a secure digital (SD) card.

FR-9. The system must have the ability to make basic edits to a stored image:

FR-9.1. Convert image from color to black and white

FR-9.2. Noise Reduction

FR-9.3. Red eye removal

FR-10. When an external device is added to the camera (e.g. an external flash), the built-in equivalent will not activate and priority will be given to the external device.

Non-Functional Requirements

NFR-1. The system shall be embedded in a camera system running a BusyBox-based operating system on a Linux kernel.

NFR-2. The system shall be in full operating mode within two seconds of the camera being turned on.

NFR-3. The system shall be in off mode within two seconds of the camera being turned off.

NFR-4. The system shall switch from automatic mode to manual mode in less than one second of the user requesting the change in operating mode.

NFR-5. The system shall be capable of controlling multiple brands of lenses compatible with the camera body lens mount.

NFR-6. The system shall be capable of recovering from a camera or system failure, leaving the camera and system in an operable state.

NFR-7. The system shall be able to persist data to physical storage in less than 1 second.

NFR-8. The system shall have two four-image buffers to allow for a rapid series of pictures to be processed and written to the SD card, respectively.

NFR-9. The system shall be able to activate the shutter to take the picture within 100ms of the user pressing the shutter button.

NFR-10. The system shall be able to convert and store still images in the JPEG format.

High-Level Design

Designing a top-level architecture for a Digital Single Lens Reflex camera is a sizeable task. In reviewing the use cases and requirements from section 1 of this document, we as designers felt a bit overwhelmed and questioned whether we had chosen a project that was constrained enough to serve as a learning exercise. As we struggled to find an architectural style or pattern that was applicable across the full problem domain, we decided that decomposing our problem into smaller, more manageable sub-problems might help us find a good architectural fit. In doing so, we discovered that the functional and nonfunctional requirements of a DSLR camera naturally fall into one of two categories.

The first category is for items in the problem domain that concern themselves with the physical operation of the components of the camera. Taking a picture with a DSLR camera in automatic mode requires the system to interface with a light sensor and then control the physical manipulation of an aperture, a shutter, a flash, and a lens. See the traceability matrix for the requirements falling into this category.

The second category consists of the aspects from the problem domain that deal with the still images captured by the operation of the camera. Here, we are concerned with converting light waves that are focused on the image sensor to an image that can be persisted to physical storage. We are also concerned with being able to apply basic edits to still images. See the traceability matrix for the requirements falling into this category.

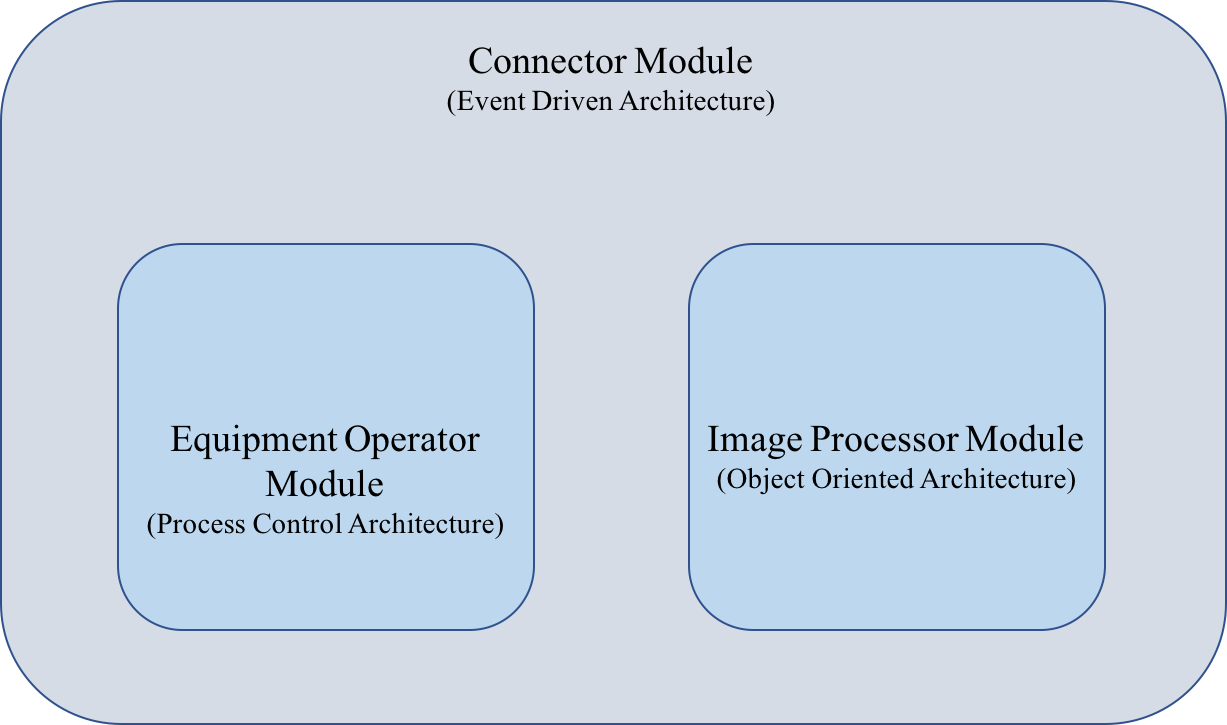

By breaking our problem down into the two categories above, we determined that it is not feasible for one architectural style to guide the design for the full problem domain. Instead, we are required to synthesize a complete architectural design by combining two or more architectural styles. The high-level module view of our overall design is shown in Figure 4 below.

We will break the software system that implements these two categories of requirements for a DSLR camera into three modules. Each module encapsulates, or is responsible for, one aspect of the system. The first module will be responsible for adjusting the physical elements of the camera (the aperture, lens, and flash) and triggering the shutter. The second module will take care of persisting and retrieving the digital image to and from physical storage. The third module will control the invocation of the first two modules (Figure 4).

(Figure 4) Module Overview of a DSLR camera system

Modules

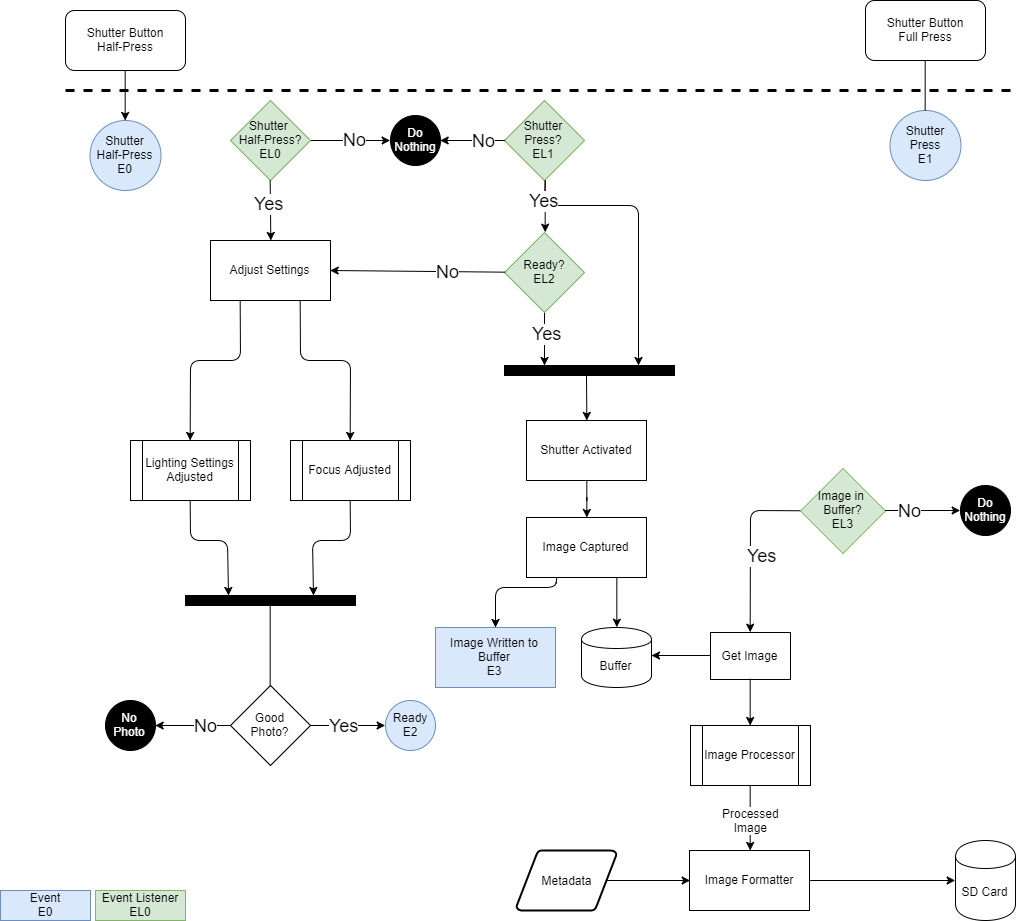

Combining the individual modules together renders a fully functioning software system for a DSLR camera. The basic workflow of the capture image process is as follows (Figure 5):

- The camera operator depresses the shutter button halfway down.

- The aperture and flash are set in response to the light sensed in the environment.

- The focal length of the lens is set based on the distance from the camera to the subject of the photograph.

- The camera operator depresses the shutter button all the way down.

- The image is captured.

- The image is translated to the JPEG format.

- The image is persisted to physical storage.

(Figure 5) High Level Functional Overview
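The workflow above can be sketched as a small object driven by the two shutter button positions. This is only an illustration under stated assumptions: the `Camera` class, its `half_press` and `full_press` methods, and the logged step descriptions are hypothetical names, not part of the design.

```python
class Camera:
    """Hypothetical stand-in that records the steps of the capture workflow."""

    def __init__(self):
        self.log = []
        self.ready = False  # True once aperture, flash, and lens are set

    def half_press(self):
        # Shutter button halfway down: adjust the equipment.
        self.log.append("set aperture and flash from sensed light")
        self.log.append("set lens from distance to subject")
        self.ready = True

    def full_press(self):
        # Shutter button fully down. A full press passes through the
        # halfway position, so adjust first if that has not happened yet.
        if not self.ready:
            self.half_press()
        self.log.append("capture image")
        self.log.append("translate image to JPEG")
        self.log.append("persist image to physical storage")
        self.ready = False  # the next shot needs a fresh adjustment


camera = Camera()
camera.half_press()
camera.full_press()
for step in camera.log:
    print(step)
```

The `ready` flag is the only state in the sketch; it stands in for the handshake between the equipment adjustment and the image capture described in the module sections.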

The following sections will detail the architectures of the Equipment Operator, Image Processor, and Controller modules.

Module 1 - Equipment Operator

The Equipment Operator module is responsible for adjusting the physical pieces of the camera based on the amount of light in the environment and the distance from the camera to the subject of the photograph. When the Controller Module (see the Module 3 section below for details) raises a setEquipment event, the Equipment Operator module will respond by engaging the process control detailed in the next section.

Design Element - DE-1

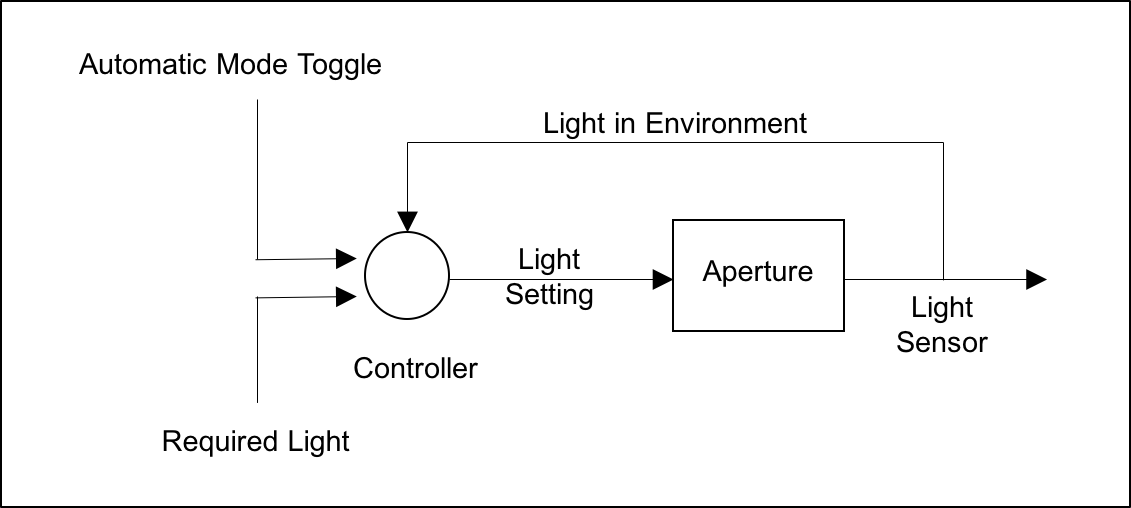

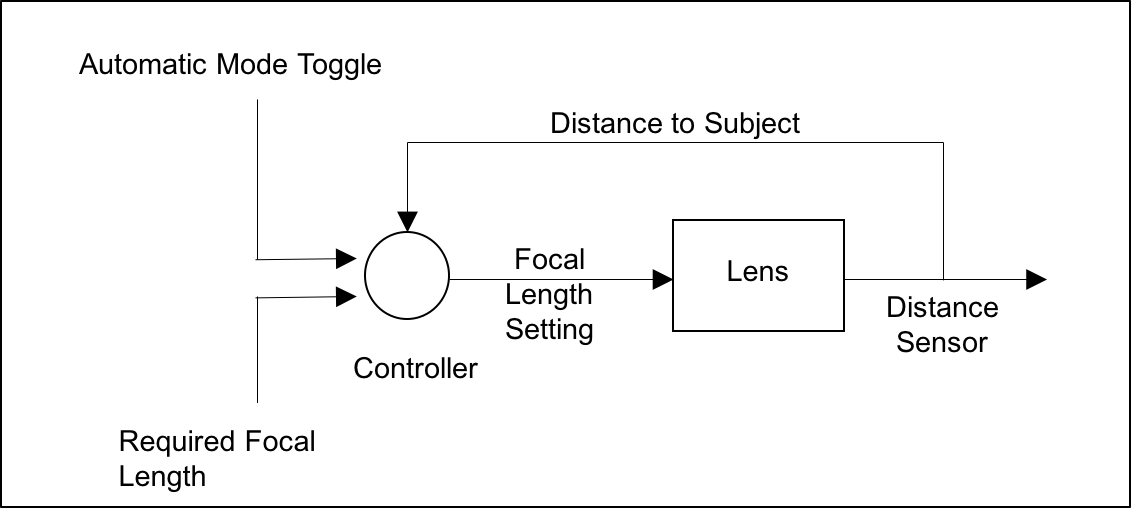

In examining the elements of the problem that Module 1 solves, we find that they closely resemble the elements of a closed loop feedback system. Closed loop feedback control systems consist of a problem definition, process input and control variables, a sensor to read the control variable, a set point or target value for the control variable, and an algorithm for controlling the process. Based on the DSLR camera problem space, this module needs two closed loop feedback controls: one for controlling the amount of light required for a photograph and one for controlling the focal length of the lens.

Applying a closed loop feedback control system to our “light and focus” problems, we come up with the following process control definitions:

| Problem Element | Light Problem | Focus Problem |
| --- | --- | --- |
| Problem Definition | The process receives a light measurement as input, opens or closes the aperture of the camera, and sets the flash to on or off. | The process receives a focal point measurement as input and rotates the lens of the camera. |
| Control Algorithm | Read the current amount of light in the environment, compare that value to the amount of light required for the image, and make adjustments to the aperture and flash. | Read the current focal length of the lens, compare that value to the focal length required for the image, and make adjustments to the lens. |
| Controlled Variable | Current light value. | Current focal length. |
| Manipulated Variable | Aperture setting. Flash on/off. | Lens focal length setting. |
| Set Point | The camera must be in automatic mode. The set point for the aperture is the amount of light needed for the image. The flash is either on or off. | The camera must be in automatic mode. The set point for the lens is the focal length needed for the subject of the image to be in focus. |
| Sensor for the Controlled Variable | The controlled variable is the current light value. This data is supplied by a light sensor in the camera. | The controlled variable is the focal length of the lens. This data is supplied by a distance sensor in the camera. |

Translating the process control definitions above into an architecture yields the following:

(Figure 6) Process control architecture for setting an aperture based on a light sensor

Here, the camera is in automatic mode and the shutter button is depressed halfway. The Controller Module raises the setEquipment event. The Equipment Operator Module, having registered as a subscriber of this event, is invoked. The system determines the set point value for the amount of light required to capture an image and compares it to the control value, the current amount of light being let into the camera. The Equipment Operator Module continually adjusts the aperture until the control value equals the set point. When the amount of light being let into the camera (the control value) equals the amount of light needed to capture an image (the set point), the Equipment Operator Module disengages the Aperture setting process control and engages the Focal Length process control. If the aperture is fully open and the light being let into the camera (the control value) is still less than the amount of light needed to capture an image (the set point), the Equipment Operator Module sets the flash to “on”, disengages the Aperture setting process control, and engages the Focal Length process control.
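A minimal sketch of this aperture loop follows, assuming the aperture moves in discrete steps and the light sensor can be modelled as a callback. The function name `light_control`, the step range, the tolerance, and the linear sensor model are all assumptions made for illustration.

```python
def light_control(read_light, set_point, step=5, max_step=10, tolerance=0.5):
    """Adjust a hypothetical aperture step until the light reading meets the set point.

    read_light(step) stands in for the light sensor: it returns the light
    reaching the sensor at the given aperture step (higher step = wider).
    Returns (final_step, flash_on).
    """
    flash_on = False
    for _ in range(2 * max_step + 2):          # bounded, so the loop always ends
        error = set_point - read_light(step)   # set point minus control value
        if abs(error) <= tolerance:
            break                              # enough light: disengage
        if error > 0:                          # too dark
            if step == max_step:               # aperture already fully open
                flash_on = True                # fall back to the flash
                break
            step += 1                          # open the aperture one step
        else:                                  # too bright
            if step == 0:
                break                          # aperture already fully closed
            step -= 1                          # close the aperture one step
    return step, flash_on


# Simulated sensor (assumption): light scales linearly with the aperture step.
normal = light_control(lambda s: 2.0 * s, set_point=12.0)
dim = light_control(lambda s: 0.5 * s, set_point=12.0)
print(normal, dim)
```

In the normally lit scene the loop settles on an aperture step without the flash; in the dim scene it runs out of aperture range and turns the flash on, which mirrors the two exit conditions described above.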

(Figure 7) Process control architecture for setting the focal length of a lens based on a distance sensor

Here, the camera is in automatic mode and the shutter button is depressed halfway. The Controller Module raises the setEquipment event. The Equipment Operator Module, having registered as a subscriber of this event, is invoked. The system determines the set point value for the focal length required to capture an in-focus image, based on the current distance from the camera to the subject of the photograph, and compares it to the control value, the current focal length of the lens. The Equipment Operator Module continually adjusts the lens until the control value equals the set point. When the focal length of the lens (the control value) equals the focal length required to capture an in-focus image (the set point), the Equipment Operator Module disengages the Focal Length setting process control and its work is complete.
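The lens loop can be sketched the same way. The example below uses a simple proportional controller, which is one possible control algorithm and not necessarily the one the design intends; `focus_control`, the gain, the tolerance, and the idea that the lens setting and the sensor reading share one unit are assumptions.

```python
def focus_control(read_distance, lens_setting, gain=0.5, tolerance=0.01, max_passes=100):
    """Move a hypothetical lens setting toward the value the distance sensor asks for.

    read_distance() stands in for the distance sensor and supplies the set point.
    Each pass corrects a fixed fraction (gain) of the remaining error.
    Returns (final_setting, passes_used).
    """
    for passes in range(max_passes):
        error = read_distance() - lens_setting   # set point minus control value
        if abs(error) <= tolerance:
            return lens_setting, passes          # in focus: disengage
        lens_setting += gain * error             # rotate the lens toward the target
    raise RuntimeError("focus did not settle")


# Subject assumed to sit at 3.0 units; lens assumed to start at 1.0.
setting, passes = focus_control(lambda: 3.0, lens_setting=1.0)
print(setting, passes)
```

Because the sensor is read on every pass, the loop would also follow a subject that moves while the shutter button is held halfway.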

Module 2 - Image Processor

The Image Processor module is responsible for retrieving raw image data from a buffer, converting that data to the JPEG format, and then persisting that JPEG data to physical storage. Metadata is stored along with the JPEG data. In addition to the metadata detailed in Figure 3, the image metadata consists of the date and time the image was captured and the longitude and latitude coordinates of the location where the image was captured. When the Controller Module (see the Module 3 section below for details) raises the captureImage event, the Image Processor Module will respond as detailed in the next section.

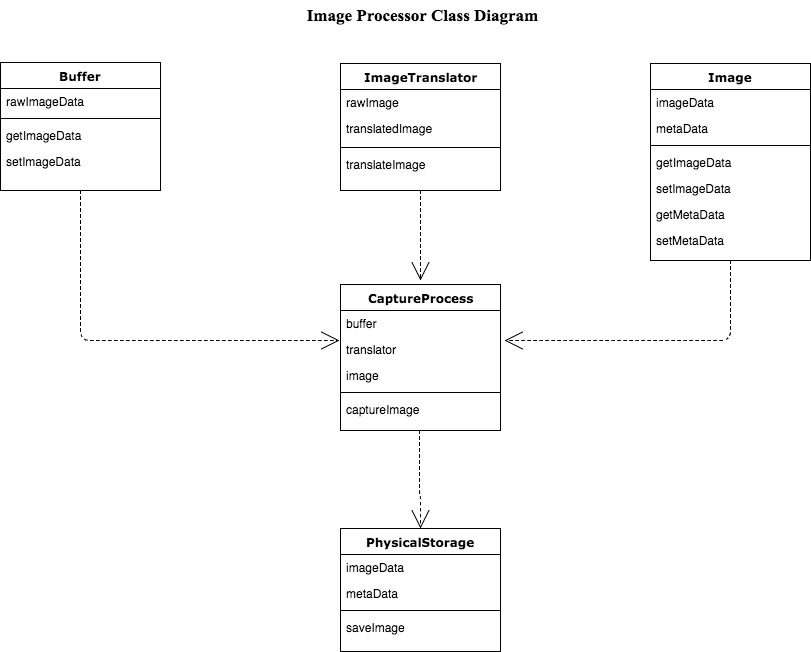

Design Element - DE-2

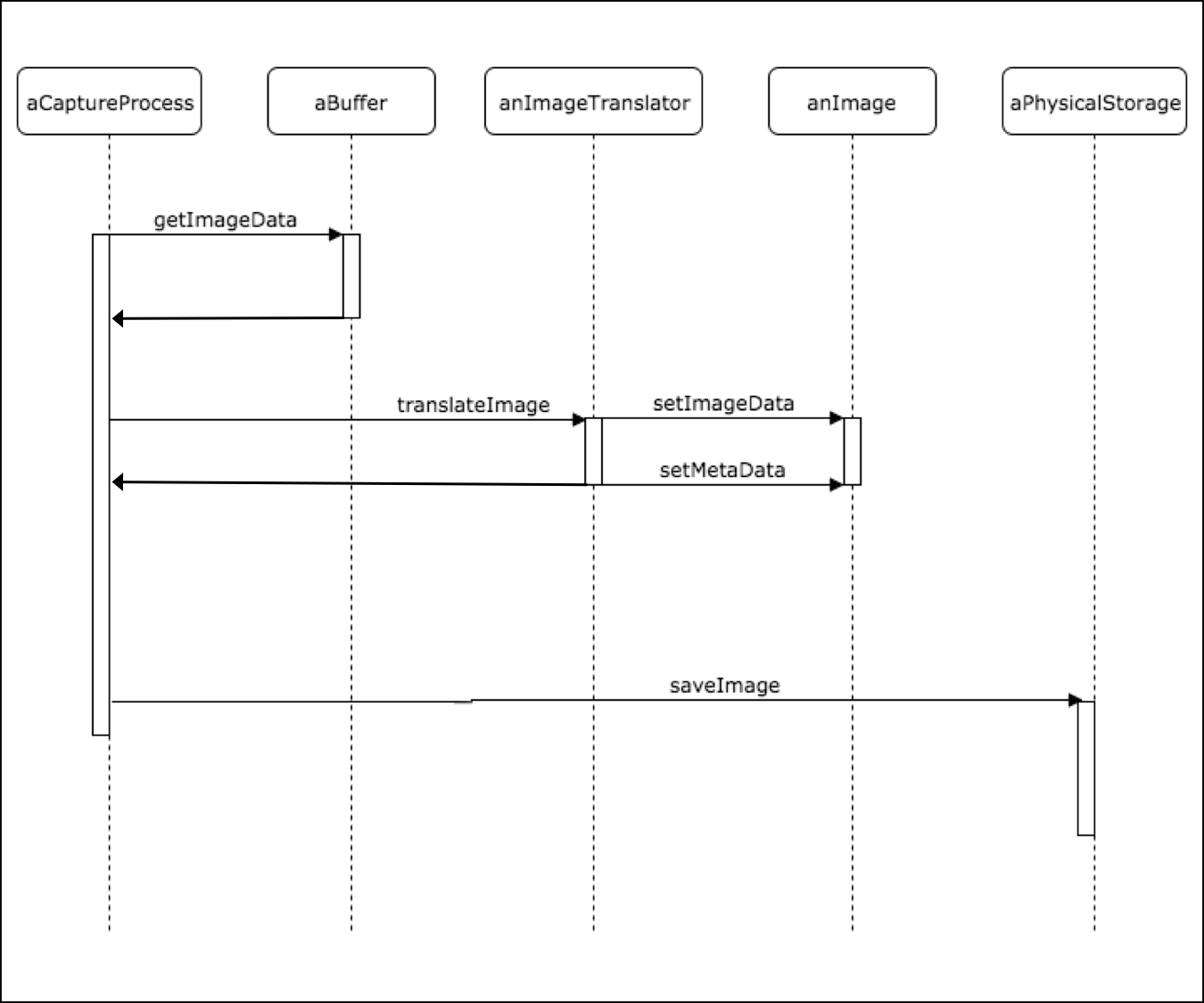

The problem domain for this module can be decomposed into objects from the domain. As seen in Figure 8, the Image Processing problem can be broken into the following objects:

- Buffer - this object encapsulates the raw image data.

- ImageTranslator - this object encapsulates the data and operations for converting raw image data to the JPEG format and performing basic edits.

- Image - this object encapsulates the data and operations of the converted JPEG image and the image’s metadata.

- PhysicalStorage - this object encapsulates the data and operations for saving the image to physical storage.

- CaptureProcess - this object encapsulates the data and operations required to respond to the captureImage event, mutate the representation, and invoke operations of the other objects in the module.

(Figure 8) A Class Diagram outlining the structure of the Image Processor module

When the operator of the camera depresses the shutter button all the way down, the Controller Module will ensure that the Equipment Operator Module has completed its work. The Controller Module will then raise the captureImage event. The Image Processor Module, having registered for this event, is invoked. As seen in Figure 9, when the CaptureProcess object responds to the captureImage event, it creates a Buffer object with the raw image data. The Buffer object containing the raw image data is passed into the ImageTranslator object, where it is converted to the JPEG format. The ImageTranslator object also performs basic edits such as removing red eyes from the raw image data. After the raw image data is converted to the JPEG format, it is packaged, along with the image metadata, into an Image object. The ImageTranslator object then passes the Image object to the PhysicalStorage object, where it is persisted to physical storage.

(Figure 9) Image Processor Module Sequence Diagram
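The object collaboration described above might look roughly like the following. The class names come from the design; every method name, the metadata keys, the fixed file name, the in-memory storage, and the placeholder "conversion" are assumptions made so the sketch is runnable. No real JPEG encoding is performed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Buffer:
    raw: bytes  # raw image data as read from the sensor


@dataclass
class Image:
    jpeg: bytes
    metadata: dict = field(default_factory=dict)


class PhysicalStorage:
    """In-memory stand-in for the SD card."""

    def __init__(self):
        self.files = {}

    def save(self, name, image):
        self.files[name] = image


class ImageTranslator:
    def __init__(self, storage):
        self.storage = storage

    def translate(self, buffer, metadata, name):
        # Placeholder conversion: a real system would JPEG-encode here.
        image = Image(jpeg=b"JPEG:" + buffer.raw, metadata=metadata)
        self.storage.save(name, image)  # hand the Image to PhysicalStorage
        return image


class CaptureProcess:
    def __init__(self, translator):
        self.translator = translator

    def on_capture_image(self, raw, settings, location):
        # Responds to the captureImage event.
        buffer = Buffer(raw)
        metadata = {
            **settings,
            "location": location,
            "captured_at": datetime.now(timezone.utc).isoformat(),
        }
        return self.translator.translate(buffer, metadata, name="IMG_0001.jpg")


storage = PhysicalStorage()
process = CaptureProcess(ImageTranslator(storage))
image = process.on_capture_image(b"\x00\x01", {"iso": 100}, (35.0, -80.0))
print(sorted(image.metadata))
```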

Module 3 - Controller

This module is responsible for providing an eventing system through which the other modules of the system are invoked. The controller module serves as the command and control center for the invocation of the Equipment Operator and Image Processor Modules.

Design Element - DE-3

The Controller Module will be rooted in the Event-Driven/Publish-Subscribe (Pub-Sub) architectural style. The controller object will publish and raise the following events:

- setEquipment - This event notifies the other modules of the system that the camera operator intends to capture an image and needs the camera to be adjusted based on the amount of light in the environment and distance between the camera and the subject of the photograph.

- captureImage - This event notifies the other modules of the system that the camera has been adjusted to ensure a quality image and that the camera operator wants to capture an image.

The Controller Module does not care what other module or modules in the system may respond to the events that it raises. It is only concerned with informing the other modules that some action needs to be taken. The Controller Module accomplishes this by publishing the setEquipment and captureImage events. The Controller Module allows other modules in the system that are interested in these events to subscribe to them. Each time the Controller Module raises one of these events, it notifies each subscriber so that they may respond. The Controller Module will only publish events when the camera is on and in automatic mode. When the camera is on and not in automatic mode, full control of the camera is given to the camera operator.
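A minimal publish-subscribe sketch of this behaviour is shown below. The event names `setEquipment` and `captureImage` come from the design; the `Controller` class shape, the `subscribe` and `publish` method names, and the `automatic` flag are assumptions.

```python
from collections import defaultdict


class Controller:
    """Publishes events to whichever handlers have subscribed."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self.automatic = True  # events are only published in automatic mode

    def subscribe(self, event, handler):
        self._subscribers[event].append(handler)

    def publish(self, event):
        # In manual mode the operator has full control, so nothing is raised.
        if not self.automatic:
            return 0
        handlers = self._subscribers[event]
        for handler in handlers:
            handler()
        return len(handlers)  # number of subscribers notified


calls = []
controller = Controller()
controller.subscribe("setEquipment", lambda: calls.append("equipment operator"))
controller.subscribe("captureImage", lambda: calls.append("image processor"))

controller.publish("setEquipment")   # half press
controller.publish("captureImage")   # full press
controller.automatic = False
notified_in_manual = controller.publish("captureImage")
print(calls, notified_in_manual)
```

The controller never names the Equipment Operator or Image Processor directly; it only walks its subscriber lists, which is the decoupling the paragraph above describes.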

Traceability Matrix

| Requirement Type | Category | Requirement ID | Design ID |
| --- | --- | --- | --- |
| Functional | Operational | FR-1 | DE-3 |
| Functional | Operational | FR-2 | DE-3 |
| Functional | Operational | FR-3 | DE-1 |
| Functional | Operational | FR-4 | DE-1 |
| Functional | Operational | FR-5 | DE-1 |
| Functional | Operational | FR-6 | DE-1 |
| Functional | Image Handling | FR-7 | DE-2 |
| Functional | Image Handling | FR-8 | DE-2 |
| Functional | Image Handling | FR-9 | DE-2 |
| Functional | Operational | FR-10 | DE-12 |
| Non-Functional | Operational | NFR-1 | DE-1, DE-2, DE-3 |
| Non-Functional | Operational | NFR-2 | DE-3 |
| Non-Functional | Operational | NFR-3 | DE-3 |
| Non-Functional | Operational | NFR-4 | DE-4 |
| Non-Functional | Operational | NFR-5 | DE-1 |
| Non-Functional | Operational | NFR-6 | DE-3 |
| Non-Functional | Image Handling | NFR-7 | DE-2 |
| Non-Functional | Image Handling | NFR-8 | DE-2 |
| Non-Functional | Operational | NFR-9 | DE-1 |
| Non-Functional | Image Handling | NFR-10 | DE-2 |

Design Rationale

Camera software is a complex combination of (often modular) physical inputs, data processing, and physical outputs. For the purposes of our design, we will be analyzing different architectures against the following functions of the camera when an image is captured: 1) adjusting the settings before taking the photo, 2) triggering the photo to be taken, 3) processing the image(s) in the buffer, and 4) writing the image(s) to the external storage device. In addition, the architectures will need to conform well to the requirements of taking photos in both automatic (FR-1) and manual mode (FR-2), all while adapting to the incorporation of various modular components, such as lenses (NFR-5), GPS, and more. In order to utilize external modular components, the system must default to using the external component rather than the onboard equivalent (FR-10).

The aforementioned functions align as subsets of the three modules above and as combinations of the various Functional and Nonfunctional Requirements listed above. Module 1 (Equipment Operator) contains the Setting Adjustment function and the part of the Shutter Trigger function responsible for the physical manipulation of the shutter. Module 2 (Image Processor) contains the Image Processing and Writing to Storage functions. Module 3 (Controller) contains the event portion of the Shutter Trigger function that coordinates the events throughout the various modules. Due to the nature of embedded systems, extensibility does not necessarily need to be one of the architecture's key attributes. In addition, speed is paramount in this system, so architectures emphasizing performance will be given higher priority.

Function 1: Setting Adjustment

The first step of taking a photo is the adjustment of the various camera settings, such as shutter speed, aperture, focus, focal length (zoom), flash activation, and light sensitivity (ISO). In the “manual” use case (FR-2), this action is quite straightforward. Since the camera operator determines the value assigned to each setting, the software only needs to read the values from each respective component. However, the “automatic” use case (FR-1) is significantly more complicated and will have a much greater impact on the overall architecture of this first function. In “automatic” mode, the camera must use a variety of sensors to detect the current settings of each component and then determine the outcome of the photo if taken with the current settings. If the photo would be underexposed, overexposed, blurry, taken in low-light conditions, etc., the camera software must determine ideal values for each component in order to create an optimal photo. The camera must also, assuming each component is compatible, use physical mechanisms to interact with its components. For example, the camera must interact with the lens to adjust the aperture (FR-4) and focus (FR-3), which are attributes that have to be physically set. The shutter speed must also be determined automatically by the system based upon the amount of light on the sensor (FR-6). In a bright environment, the shutter speed will be fast; conversely, in a dark environment, the shutter speed will be slow so as to allow more light to hit the sensor. In addition to being able to adjust these settings to create an optimal photo, the camera must be able to perform all of these actions at once, and quickly, so as not to prevent the photographer from achieving the desired shot. From this perspective, the asynchronous coordination of each component in a timely fashion is arguably the most important feature that an architecture should be able to provide.
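The link between light level and shutter speed can be made concrete with the standard photographic exposure value relation, EV = log2(N² / t), where N is the f-number and t is the exposure time in seconds. The document does not state which metering formula the system would use, so the sketch below is only an illustration; the function name and the two example scenes are assumptions.

```python
def shutter_time(ev, f_number):
    """Exposure time in seconds, solved from EV = log2(N^2 / t)."""
    return f_number ** 2 / 2 ** ev


# Assumed example scenes: a higher EV means a brighter environment.
bright = shutter_time(ev=15, f_number=16)  # 256 / 32768 s
dim = shutter_time(ev=7, f_number=4)       # 16 / 128 s
print(bright, dim)
```

The bright scene yields a much shorter exposure time than the dim one, which is the fast-versus-slow shutter behaviour described in the paragraph above.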

The aforementioned architectural requirement rules out several styles that would not allow for the quick adjustment of these settings. A few styles stand out as being potentially well suited to this requirement, such as the Process Control solution style, the Shared Memory design, and the Event-Driven design. For the “automatic” use case, the Process Control solution style would allow a variety of sensors to be read and then determine a set point that will result in the best photo. This process is, by design, continuous and allows for frequent fluctuations of variables in the environment. The Shared Memory design has the benefit of being efficient and fast, but does not necessarily align with the use case. Since we are reading data from different sensors and using the data to determine ideal values for the image, there is no instance where memory would need to be shared. This would just add unnecessary complexity to the system. When considering an Event-Driven design, the fact that the component raising the event has no control over how other components will respond, or even when they respond, makes it particularly unsuitable for a module that requires sequenced processing. In addition, from the perspective of adjusting settings, it is not very efficient to publish an event to all subscribers every time the light level in the environment changes slightly. Based on the above analysis, the Process Control architectural style seems best suited to the task of settings adjustment.

Function 2: Triggering the Shutter

The act of triggering the shutter (the part of the camera that is actually responsible for taking the photo) is arguably the most critical part of the entire system. In many respects, it is the piece of the system that controls the use of other components in the system as described in Module 3 (Controller). While the adjustment of settings must take place quickly, it is the triggering of the shutter that should have the least amount of latency. The standard amount of latency for a mid-range, consumer DSLR is roughly 100ms or less (NFR-9). With this in mind, reducing the latency between the action triggering the shutter and the shutter activating is an aspect of the design that needs to be hoisted into the architecture wherever possible. Hoisting low latency into the architecture will ensure that the camera functions quickly above all else. It is not enough, however, to simply take the photo immediately after the camera operator presses the shutter button. In automatic mode, if the camera operator presses the shutter button when the current settings would cause the photo to be significantly overexposed (white) or underexposed (black), then the photo should not be taken at all.

To accomplish this, the system needs to evaluate whether or not the settings are conducive to a quality photo. The Setting Adjustment function will need to notify the system when the settings are appropriate for a photo to be taken. At this point, the camera operator still needs to fully press the shutter button (half-press initiating the Setting Adjustment function). Both events need to be asynchronous and resolve before triggering the shutter. When this occurs, all components in the camera need to respond in a coordinated manner to produce an optimal image. Since the settings being ready and the shutter button press can certainly be described as significant state changes in the system, the Event-Driven architecture comes to mind immediately. In addition, the need for concurrency would seem to fit well with the Shared Data design. The Event-Driven architecture is easily extensible, allowing the addition of new components which can be added to the subscriber list to listen for the shutter activation event. The Shared Data design is easily extensible as well, since new components need only reference the shared data elements when created or added to the system. In these regards, the two designs are on relatively even footing. However, where the Event-Driven architecture surpasses the Shared Data design is its ability to respond to specific events without being as resource intensive - nor as inefficient - as a recurring check against the shared data model would be.
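The requirement that two independent events both resolve before the shutter fires can be sketched as a small gate object. `ShutterGate` and its method names are hypothetical; the sketch only shows the coordination logic, not real hardware access.

```python
class ShutterGate:
    """Fires the shutter only after both required events have arrived."""

    def __init__(self, fire):
        self._fire = fire               # callback that activates the shutter
        self._settings_ready = False
        self._button_pressed = False

    def on_settings_ready(self):
        # Raised when the Setting Adjustment function reports usable settings.
        self._settings_ready = True
        self._maybe_fire()

    def on_full_press(self):
        # Raised when the operator presses the shutter button all the way.
        self._button_pressed = True
        self._maybe_fire()

    def _maybe_fire(self):
        if self._settings_ready and self._button_pressed:
            # Reset both flags so the next shot needs fresh events.
            self._settings_ready = False
            self._button_pressed = False
            self._fire()


shots = []
gate = ShutterGate(lambda: shots.append("click"))
gate.on_full_press()        # button first: nothing happens yet
early = list(shots)
gate.on_settings_ready()    # settings arrive: shutter fires once
print(early, shots)
```

The events may arrive in either order. Nothing polls shared state; the check runs only when an event is delivered, which is the efficiency argument made for the Event-Driven style above.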

The other portion of this function is the physical activation of the shutter in order to take the photo (Module 1 - Equipment Operator). This piece partially overlaps the Setting Adjustment function in that the shutter speed must either be set by the camera operator in manual mode, or by the system in automatic mode. Since the system sets the shutter speed based on the amount of light in the environment, a Process Control system similar to the Setting Adjustment function makes the most sense for the same reasons listed above.

Function 3: Image Processing

After the shutter has been activated and the picture taken, the camera must apply any default image processing (FR-9) to the photos stored in the buffer (NFR-8). For example, most cameras have a “noise reduction” feature that helps eliminate the graininess of photos, particularly when a high ISO (light sensitivity) is used (FR-9.2). In addition, many DSLRs have image editing abilities, such as the application of black and white filters (FR-9.1). These edits can be applied before the image is written to storage, or after, when the camera operator is reviewing their photos. For this reason, the image processing component should be reusable. Once the edits are complete, the processed images are held in the buffer (NFR-8) until they can be written to the SD card, in case the image processing completes faster than the SD card can accept the data. This situation may seem unlikely, but the speed with which files can be written to the SD card is contingent upon the “class” of the SD card, ranging from Class 2 (slowest - min. 2 MB/s write) to Class 10 (fastest - min. 10 MB/s write).
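A quick calculation shows why the card class matters for the one-second persistence target in NFR-7. The 8 MB image size below is an assumed figure chosen only for illustration; the minimum write speeds are the Class 2 and Class 10 values quoted above.

```python
def write_time_seconds(file_size_mb, min_write_mb_per_s):
    """Worst-case time to write one file at the card's minimum sustained speed."""
    return file_size_mb / min_write_mb_per_s


IMAGE_MB = 8  # assumed size of one processed image, for illustration only

class_2 = write_time_seconds(IMAGE_MB, 2)
class_10 = write_time_seconds(IMAGE_MB, 10)
print(class_2, class_10)
```

Under this assumption, only the Class 10 card stays below one second per image at its guaranteed minimum speed, so a slower card would let processed images accumulate in the buffer.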

The Image Processing function should be structured in such a way that new processing components can be added or removed based on user preference. For example, the camera operator should be able to specify that noise-reduction, a black and white filter, and JPEG format should all be applied to the resulting picture. This requirement lends itself to more extensible architectures, like Pipe-and-Filter and Object-Oriented. Other architectures, such as Event-Driven, are certainly extensible as well. However, since the image can only have one form of processing applied at a time, there is no sense in choosing a design that is more complex and better geared to asynchronous uses when it would ultimately be implemented in a sequential manner. The Pipe-and-Filter architecture has certain other drawbacks, such as the fact that it is rather computationally inefficient, since the data as a whole has to be copied to each component and then modified. Since a camera needs to process images as quickly as possible, and ideally with minimal computing power, the Pipe-and-Filter architecture is too resource intensive to be viable. The Object-Oriented design, however, would only require the data to be held in memory in one location, upon which all changes would be made directly. In addition, each processing component of the Image Processor is logically separated from the rest of the components, allowing for modularity. Treating images as objects has the added benefits of being easily comprehended and of giving every image the same structure. Certain attributes in the metadata will always be present, so they can be set by the system or left as null.
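The idea of interchangeable processing components that all modify one in-memory copy of the image can be sketched as follows. The class names, the list-of-RGB-tuples pixel layout, the averaging grayscale, and the three-pixel median filter are assumptions chosen to keep the example small; they are not the design's actual algorithms.

```python
class BlackAndWhite:
    def apply(self, pixels):
        # Replace each pixel with the average of its channels, in place.
        for i, (r, g, b) in enumerate(pixels):
            gray = (r + g + b) // 3
            pixels[i] = (gray, gray, gray)


class NoiseReduction:
    def apply(self, pixels):
        # Per-channel median of each interior pixel and its two neighbours.
        # `previous` keeps the neighbour's original value, so the filter
        # works in place without copying the whole image.
        previous = pixels[0]
        for i in range(1, len(pixels) - 1):
            current = pixels[i]
            pixels[i] = tuple(
                sorted(channel)[1]
                for channel in zip(previous, current, pixels[i + 1])
            )
            previous = current


def process(pixels, processors):
    """Apply the operator's chosen processors one after another, in place."""
    for processor in processors:
        processor.apply(pixels)
    return pixels


pixels = [(10, 10, 10), (250, 0, 0), (12, 12, 12), (14, 14, 14)]
result = process(pixels, [NoiseReduction(), BlackAndWhite()])
print(result)
```

Adding or removing an edit only changes the list passed to `process`, and `result` is the same list object as `pixels`, so no copy of the image data is made between steps.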

Function 4: Writing to Storage

Finally, after all the other functions have been performed, the image must be stored on the SD card (FR-7). Functionally, the camera must be able to take several photos in short succession - faster than the camera can write to the SD card. Because of this limitation, there must be a buffer to hold the image data while the series of photos is written to the SD card (NFR-8). The data written to the buffer will be in RAW image format (a minimally processed form of image data). At the point in time that the camera writes the data from the buffer onto the SD card, the image may be stored in the RAW image format, or JPEG format (a compressed, processed form) based on the camera operator’s settings (NFR-10). The process of writing the information from the buffer to the SD card will take no more than one second per image (NFR-7). Information from various camera components (either onboard or external) must be aggregated and stored as image metadata. This data will contain information about the system’s settings when the image was captured, a timestamp, and location data if a GPS component is present (FR-8).
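The buffering described above behaves like a bounded producer-consumer queue. The sketch below uses Python's standard `queue.Queue` with four slots to mirror NFR-8; the list standing in for the SD card, the `None` sentinel, and the image names are assumptions.

```python
import queue
import threading

BUFFER_SLOTS = 4  # four-image buffer, per NFR-8


def writer(buffer, card):
    """Drain the buffer one image at a time, as a single SD card writer would."""
    while True:
        image = buffer.get()
        if image is None:       # sentinel: no more images are coming
            break
        card.append(image)      # stand-in for the actual SD card write


buffer = queue.Queue(maxsize=BUFFER_SLOTS)
card = []
thread = threading.Thread(target=writer, args=(buffer, card))
thread.start()

for n in range(6):
    buffer.put(f"image-{n}")    # blocks while all four slots are full
buffer.put(None)
thread.join()
print(card)
```

When the burst is longer than the buffer, `put` blocks until the writer frees a slot, which models the camera pausing capture while the card catches up.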

Because the SD card cannot have more than one image written to it at a time, a more sequential architectural style must be considered. Since writing data to the SD card is heavily contingent upon the Image Processing function, and both are subsets of the Image Processor module, the Object-Oriented design will carry through to the writing of the data to the SD card. Any change in architecture for the management of the data would cost time and processing power, both of which need to be minimized in the context of an embedded system such as a DSLR camera.

Conclusion

Systems with multiple function types - in this case, interfacing with physical components and data processing elements - often need to rely on the use of multiple architectural styles to create the most robust and efficient design. In the case of embedded systems, certain limitations exist regarding processing power and memory which must be accounted for as well. For a system as varied in function as a DSLR camera - and other embedded systems - it would be unreasonable to assume that all functions could be completed effectively and efficiently by a single architectural style. Because of this, we chose an Event-Driven design as the top-level style to coordinate events between physical component interaction and software functions. Within the hardware module of the system (Equipment Operator), a Process Control design was used to account for the myriad changing variables in the environment in order to produce an optimal image in automatic mode. Within the software module of the system (Image Processor), an Object-Oriented design was used to apply a consistent structure to the processed images, as well as to allow for interchangeability of certain image processing features such as noise-reduction. By combining these various architectural styles, our overall system design is one that is efficient, responsive, and well-suited to an embedded system environment.