How do I interpret Helgrind's output?

Helgrind, being part of the valgrind suite of tools, relies on binary instrumentation.

Therefore, it doesnt need access to your source code, but unfortunately it also

has a somewhat limited understanding of your source program, which can make deciphering

its output a bit challenging, or at least will require going back and forth between

the output and your source code. Make sure you've compiled your code with -g to

obtain line number information before running Helgrind.

Below, I pick apart a typical message shown by Helgrind:

After starting a program, Helgrind shows

valgrind --tool=helgrind ./threadpool_test4

==2989522== Helgrind, a thread error detector

==2989522== Copyright (C) 2007-2017, and GNU GPL'd, by OpenWorks LLP et al.

==2989522== Using Valgrind-3.18.1 and LibVEX; rerun with -h for copyright info

==2989522== Command: ./threadpool_test4

==2989522==

starting 40 tasks...

Then, when it detects the first problem, it'll say:

==2989522== ---Thread-Announcement------------------------------------------

==2989522==

==2989522== Thread #2 was created

==2989522== at 0x5DC9D72: clone (in /usr/lib64/libc-2.28.so)

==2989522== by 0x5B7708E: create_thread (in /usr/lib64/libpthread-2.28.so)

==2989522== by 0x5B78A45: pthread_create@@GLIBC_2.2.5 (in /usr/lib64/libpthread-2.28.so)

==2989522== by 0x4C417A0: pthread_create_WRK (hg_intercepts.c:445)

==2989522== by 0x4C42CAF: pthread_create@* (hg_intercepts.c:478)

==2989522== by 0x402D99: thread_pool_new (threadpool.c:72)

==2989522== by 0x4017A4: run_test (threadpool_test4.c:52)

==2989522== by 0x402A35: main (threadpool_test4.c:142)

==2989522==

This information tells you what Thread #2 is. Helgrind identifies it by

where it was created: here, on line 72 in threadpool.c.

(If multiple threads were created on this line, then unfortunately it won't

tell you which one.)

Then:

==2989522== ---Thread-Announcement------------------------------------------

==2989522==

==2989522== Thread #1 is the program's root thread

==2989522==

==2989522== ----------------------------------------------------------------

This tells you that Thread #1 is the root, or main thread. That's the

thread that called main (you didn't create it).

Next, Helgrind will tell you about a pthread_mutex_t, or lock, which

will play a role. It is identified by its address, 0x6EA1EE0, and

since Helgrind doesn't understand your program it can help you identify

which lock that is only by providing 2 pieces of information it does have,

namely first when it was first initialized as a lock, and second information

about where the memory that stores the lock was allocated:

==2989522== ---Thread-Announcement------------------------------------------

==2989522==

==2989522== Lock at 0x6EA1EE0 was first observed

==2989522== at 0x4C4300E: pthread_mutex_init (hg_intercepts.c:818)

==2989522== by 0x402B3D: thread_pool_new (threadpool.c:60)

==2989522== by 0x4017A4: run_test (threadpool_test4.c:52)

==2989522== by 0x402A35: main (threadpool_test4.c:142)

==2989522== Address 0x6ea1ee0 is 64 bytes inside a block of size 160 alloc'd

==2989522== at 0x4C38FC5: malloc (vg_replace_malloc.c:381)

==2989522== by 0x402A52: thread_pool_new (threadpool.c:57)

==2989522== by 0x4017A4: run_test (threadpool_test4.c:52)

==2989522== by 0x402A35: main (threadpool_test4.c:142)

==2989522== Block was alloc'd by thread #1

This says that the lock 0x6EA1EE0 was initialized on line 60 in threadpool.c inside

the thread_pool_new function. The memory where the lock is stored was

allocated via malloc on line 57 when this function was called by thread #1,

the main thread.

The allocation on line 57 was probably for a larger struct that had a

pthread_mutex_t field. This field was 64 bytes inside the struct, maybe like

so:

struct {

... other data taking 64 bytes

pthread_mutex_t lock;

...

}

With these pieces of information, you can now look into your source code

and identify which lock Helgrind is talking about when it refers to

lock 0x6EA1EE0.

Similarly, there is a second lock Helgrind spotted, at 0x6EA2950

==2989522==

==2989522== Lock at 0x6EA2950 was first observed

==2989522== at 0x4C4300E: pthread_mutex_init (hg_intercepts.c:818)

==2989522== by 0x404D97: thread_pool_submit (threadpool.c:287)

==2989522== by 0x401AD7: run_test (threadpool_test4.c:60)

==2989522== by 0x402A35: main (threadpool_test4.c:142)

==2989522== Address 0x6ea2950 is 112 bytes inside a block of size 152 alloc'd

==2989522== at 0x4C38FC5: malloc (vg_replace_malloc.c:381)

==2989522== by 0x404B39: thread_pool_submit (threadpool.c:281)

==2989522== by 0x401AD7: run_test (threadpool_test4.c:60)

==2989522== by 0x402A35: main (threadpool_test4.c:142)

==2989522== Block was alloc'd by thread #1

==2989522==

This lock is inside whatever struct or array was allocated on line 281 in

thread_pool_submit and it was initialized on line 287. This lock

is a different lock (because it's at a different address.)

Now, Helgrind is ready to tell you about the actual data race. A data race, as you recall, are 2 conflicting accesses to some memory location, at least one of which is a write, and there's no happens-before relationship. Because happens-before relationships are often (though not always) enforced by using locks, Helgrind will tell you about the locks that were held when the data race was observed.

Valgrind tells you about the first access involved here:

==2989522== Possible data race during write of size 4 at 0x6EA2908 by thread #2

==2989522== Locks held: 1, at address 0x6EA1EE0

==2989522== at 0x403660: start_routine (threadpool.c:137)

==2989522== by 0x4C41998: mythread_wrapper (hg_intercepts.c:406)

==2989522== by 0x5B781CE: start_thread (in /usr/lib64/libpthread-2.28.so)

==2989522== by 0x5DC9D82: clone (in /usr/lib64/libc-2.28.so)

==2989522==

A "write of size 4 at 0x6EA2908" could be, for instance, an

assignment to an integer (4-byte) variable. It occurs on

line 137 in the function start_routine in thread #2 (defined

above).

Since a data race involves at least 2 accesses, Helgrind tells you about one other access involved:

==2989522== This conflicts with a previous read of size 4 by thread #1

==2989522== Locks held: 1, at address 0x6EA2950

==2989522== at 0x4053EF: future_get (threadpool.c:322)

==2989522== by 0x401C35: run_test (threadpool_test4.c:64)

==2989522== by 0x402A35: main (threadpool_test4.c:142)

This other access was a read on line 322 in future_get in threadpool.c.

It referred to the same address (which is why the address isn't listed again).

Lastly, Helgrind tells you what it knows about address 0x6ea2908:

it's inside a block of memory (likely a struct) you allocated

on line 281 in thread_pool_submit:

==2989522== Address 0x6ea2908 is 40 bytes inside a block of size 152 alloc'd

==2989522== at 0x4C38FC5: malloc (vg_replace_malloc.c:381)

==2989522== by 0x404B39: thread_pool_submit (threadpool.c:281)

==2989522== by 0x401AD7: run_test (threadpool_test4.c:60)

==2989522== by 0x402A35: main (threadpool_test4.c:142)

==2989522== Block was alloc'd by thread #1

==2989522==

==2989522== ----------------------------------------------------------------

Again, "40 bytes inside a block" means that it's inside a struct.

If it isn't clear which field it is (that is, if there are multiple accesses

on lines 137 and 322, then you can either rearrange your code to have only

one access per line, or count out the size of the fields inside the struct

to identify offset 40. Or use offsetof().

In 95% of cases, however, the offending field will be clear.

Now that Helgrind told you about the data race, what can you infer? Your code violated a basic principle of multithreaded programming, which is that of data race freedom. You wrote to and read from a shared variable but you didn't have a lock to protect this variable.

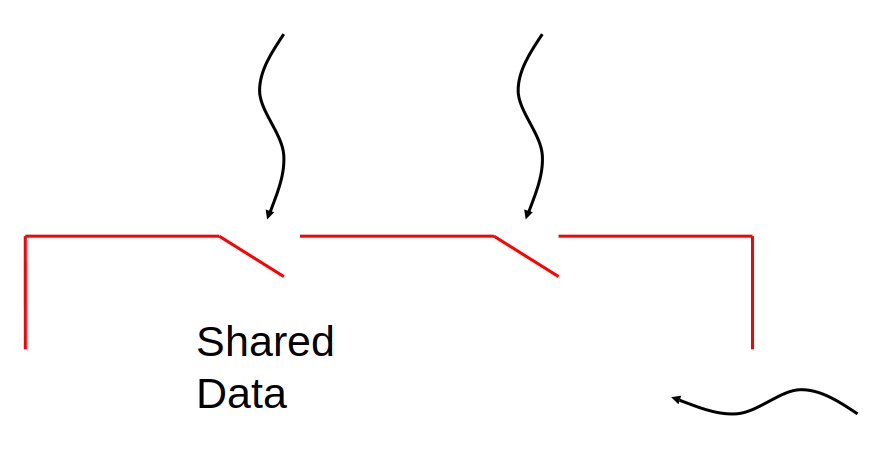

Helgrind found that instead of using 1 lock to protect this variable, you may have tried to use 2 locks: one lock was used in an attempt to protect the first access, but a different lock was used for the second access. This renders these locks useless. The lecture slides on locking illustrate this mistake:

It's as if you tried to protect access to a room by with 2 lockable doors instead of one. One thread can enter through one door, and even if that thread locks the door behind it, another thread can enter through the other door and then both threads' accesses will interfere with one another.