What is false sharing and how do we avoid it?

When multiple threads share a variable in memory and read and/or write to it, the variable's value must be read into the cache of each CPU on which those threads execute. If all threads only read, a shared, read-only copy of the variable can be kept in every cache. However, when one thread writes, its CPU requires an exclusive copy, which means that the copies in all other CPUs' caches must be invalidated. This is one of the overheads of sharing variables in shared memory. If writes happen frequently, the resulting cache coherency traffic can significantly slow down these memory accesses.

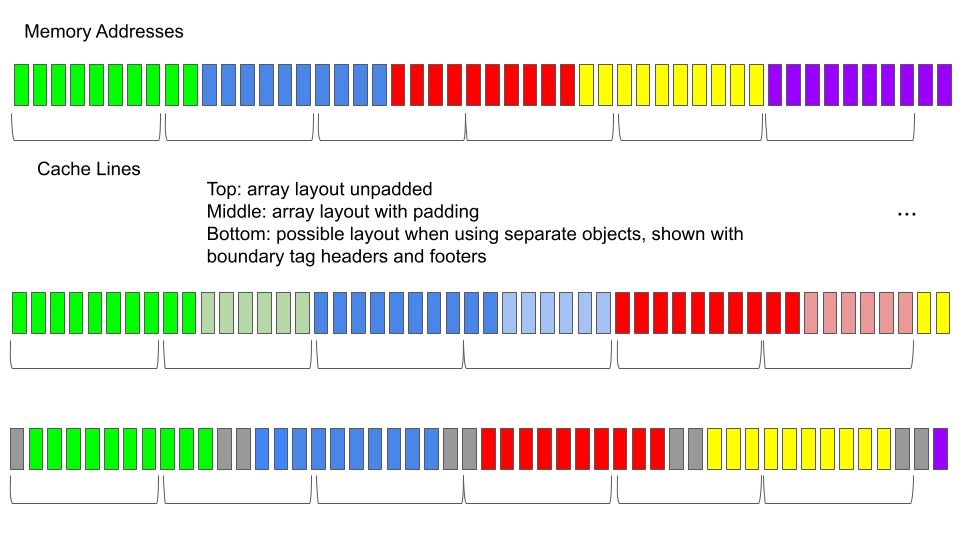

However, caches have no notion of variables - they operate on cache lines (typically 64 bytes in size). When distinct variables are located within the same cache line, a write to one of them invalidates the whole line in the other CPUs' caches, so cache coherency traffic may result even if none of those variables is itself shared between threads. This is known as false sharing.
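To make the notion of "located within the same cache line" concrete, here is a minimal sketch in C. The 64-byte line size, the helper names, and the `counters` struct are assumptions chosen for illustration. The actual line size depends on the CPU.

```c
#include <assert.h>
#include <stdalign.h>
#include <stdbool.h>
#include <stdint.h>

#define CACHE_LINE_SIZE 64  /* assumed line size; check your CPU */

/* Index of the cache line that contains the given address. */
static uintptr_t cache_line_of(const void *p)
{
    return (uintptr_t)p / CACHE_LINE_SIZE;
}

/* True if both addresses fall within the same cache line. */
static bool same_cache_line(const void *a, const void *b)
{
    return cache_line_of(a) == cache_line_of(b);
}

/* Two logically independent counters, e.g. one per thread.
   The alignas on the first member makes the struct start on a
   line boundary, so the layout is predictable. */
struct counters {
    alignas(CACHE_LINE_SIZE) long hits_thread0;
    long hits_thread1;  /* placed directly after hits_thread0 */
};

static struct counters demo_counters;
```

With this layout, `same_cache_line(&demo_counters.hits_thread0, &demo_counters.hits_thread1)` is true. If one thread updates only the first counter and another thread updates only the second, the two threads still contend for the same line.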

For instance, the figure shows an array of identical structs. This array may have been allocated with a single call to malloc - it occupies a contiguous region of memory addresses. The bottom part of the first element (green) shares a cache line with the top part of the second element (blue). Therefore, even if one thread accesses only the green element and another only the blue element, sharing overhead may result because the two elements overlap in terms of cache lines.
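The overlap can be worked out with simple arithmetic. The sketch below assumes a hypothetical 96-byte element, 64-byte cache lines, and an array whose first byte sits on a cache line boundary. None of these numbers come from the figure.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define CACHE_LINE_SIZE 64  /* assumed line size */

struct item { char data[96]; };  /* hypothetical 96-byte element */

/* First cache line (relative to the start of the array) touched by element i. */
static size_t first_line(size_t i)
{
    return (i * sizeof(struct item)) / CACHE_LINE_SIZE;
}

/* Last cache line touched by element i. */
static size_t last_line(size_t i)
{
    return ((i + 1) * sizeof(struct item) - 1) / CACHE_LINE_SIZE;
}

/* True if elements i and j (with i < j) touch at least one common line. */
static bool elements_share_line(size_t i, size_t j)
{
    return last_line(i) >= first_line(j);
}
```

Under these assumptions, element 0 occupies bytes 0 to 95 (lines 0 and 1) and element 1 occupies bytes 96 to 191 (lines 1 and 2), so the two meet in line 1. Element 2 starts at byte 192, which is exactly a line boundary, so elements 1 and 2 do not overlap. Whether neighbours collide therefore depends on how the element size lines up with the cache line size.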

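A solution is shown in the middle: by padding the data structures (adding some padding bytes that are never accessed, shown in light-green/light-blue), distinct elements can be placed in disjoint cache lines. For this to be guaranteed, the padded size must be a multiple of the cache line size and the array itself must start on a cache line boundary.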
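As an alternative to counting padding bytes by hand, C11 can have the compiler add them. The sketch below uses `alignas` instead of an explicit padding array. The struct name, the array, and the 64-byte line size are assumptions for illustration.

```c
#include <assert.h>    /* static_assert (C11) */
#include <stdalign.h>  /* alignas, alignof (C11) */
#include <stdbool.h>
#include <stdint.h>

#define CACHE_LINE_SIZE 64  /* assumed line size */

/* Aligning one member to the line size raises the alignment of the
   whole struct; the compiler then rounds sizeof up to a multiple of
   that alignment by adding tail padding. */
struct padded_counter {
    alignas(CACHE_LINE_SIZE) long value;
};

static_assert(sizeof(struct padded_counter) % CACHE_LINE_SIZE == 0,
              "each element must fill whole cache lines");
static_assert(alignof(struct padded_counter) == CACHE_LINE_SIZE,
              "each element must start on a line boundary");

/* One element per thread; each element occupies its own line. */
static struct padded_counter per_thread[4];

/* True if the address is a multiple of the assumed line size. */
static bool is_line_aligned(const void *p)
{
    return (uintptr_t)p % CACHE_LINE_SIZE == 0;
}
```

This covers statically allocated and stack objects. Memory obtained from plain `malloc` is not guaranteed to be 64-byte aligned, so heap-allocated arrays of such structs need an aligned allocation function.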

At the bottom, we show the situation in which different objects are allocated separately. In this case, there may be bytes between them (for headers and footers used by the memory allocator), but it is still possible for false sharing to occur, which in turn can also be avoided using padding.
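For separately allocated objects, one possible approach is to request line-aligned memory and round the size up to whole cache lines. This is a sketch using C11 `aligned_alloc`. The helper names and the 64-byte line size are assumptions, and `aligned_alloc` is not available on every platform.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define CACHE_LINE_SIZE 64  /* assumed line size */

/* Round n up to the next multiple of the cache line size. */
static size_t round_up_to_line(size_t n)
{
    return (n + CACHE_LINE_SIZE - 1) / CACHE_LINE_SIZE * CACHE_LINE_SIZE;
}

/* Allocate an object that starts on a line boundary and whose
   allocation covers every line it touches, so that no other object
   is placed in those lines. Release with free().
   Intended for size > 0; returns NULL on failure. */
static void *alloc_line_exclusive(size_t size)
{
    /* C11 requires the size passed to aligned_alloc to be a
       multiple of the alignment, hence the rounding. */
    return aligned_alloc(CACHE_LINE_SIZE, round_up_to_line(size));
}
```

A 40-byte object allocated this way consumes a full 64 bytes, so the price of avoiding false sharing is higher memory use.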

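To pad a struct, add an unused byte array as its last field. The 50 below is only an example: choose the number of padding bytes so that the total size of the struct is a multiple of the cache line size.

    struct yourstruct {
        ... your fields ...
        char pad[50]; // add 50 bytes of padding
    };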
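Rather than hard-coding the number of padding bytes, the amount can be derived from the size of the fields. The following is a sketch under assumptions: the field names, the `stats` types, and the 64-byte line size are illustrative.

```c
#include <assert.h>  /* static_assert (C11) */
#include <stddef.h>

#define CACHE_LINE_SIZE 64  /* assumed line size */

/* The real fields, grouped in their own struct so that their
   combined size can be measured with sizeof. */
struct stats_fields {
    long   count;
    double sum;
};

/* Fields plus enough padding to reach the next multiple of the line
   size. If the fields already fill whole lines, this formula adds
   one extra line, because standard C has no zero-length arrays. */
struct stats {
    struct stats_fields f;
    char pad[CACHE_LINE_SIZE - sizeof(struct stats_fields) % CACHE_LINE_SIZE];
};

static_assert(sizeof(struct stats) % CACHE_LINE_SIZE == 0,
              "padded struct must fill whole cache lines");
```

Padding only fixes the size of each element. An array of `struct stats` must also begin on a cache line boundary for its elements to land in disjoint lines.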